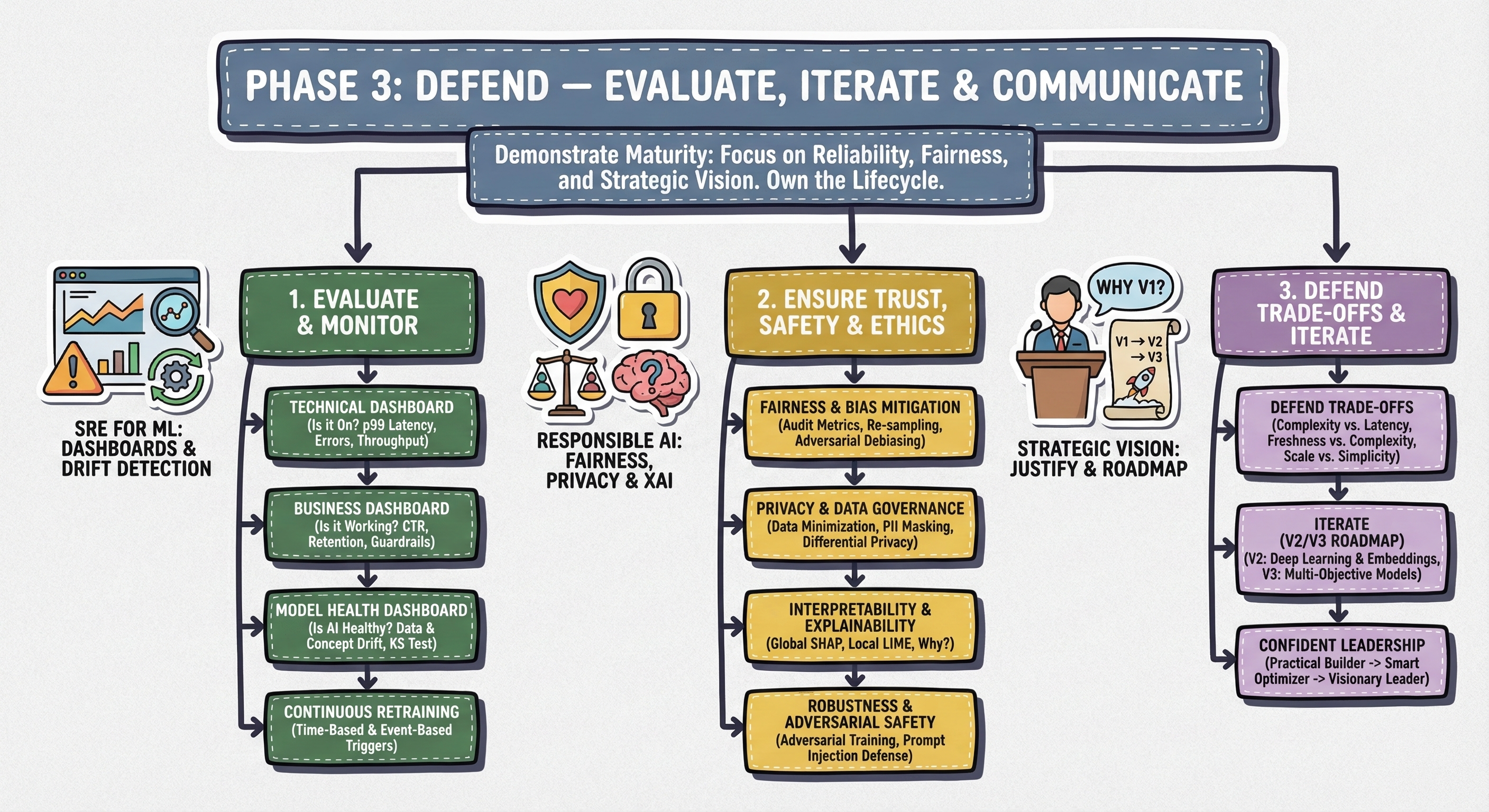

Phase 3: DEFEND — Evaluate, Iterate & Communicate

Demonstrate maturity by focusing on reliability, fairness, and explainability.

DEFEND is the final stage of the interview, designed to test your seniority and maturity as an engineer. After you've metaphorically "built" the system in Phase 2, you must now prove you can own it for its entire lifecycle.

The focus shifts from pure technical architecture to demonstrating maturity by focusing on reliability, fairness, and strategic communication. This phase proves you're not just a builder, but a responsible, senior-level leader.

This phase consists of three steps:

Evaluate & Monitor: You'll design the dashboards and alerts to ensure the model stays healthy, accurate, and reliable in production, proving you can handle "model drift."

Ensure Trust, Safety & Ethics: You'll proactively address the system's impact on users, covering critical topics like fairness, bias, privacy, and explainability.

Defend Trade-offs & Iterate: In this "grand finale," you'll confidently justify why you made your design choices and then show your strategic vision by laying out a roadmap for V2 and V3.

3.1. Evaluate & Monitor

Alright, you did it. You designed the system, you trained the model, you ran the A/B test (in Step 6), and your new model (Model B) won. It's now live and serving 100% of traffic.

This is the exact moment many candidates stop. They "pop the champagne" and consider the interview over. This is a massive mistake.

Your interviewer is now testing your seniority. A junior engineer ships a model. A senior engineer owns the model for its entire lifecycle. Your new model is now a living, breathing part of production, and it will break. The real world is not static; it will change, and your model's performance will decay. This is known as "model drift."

Your goal in this step is to prove you are an "SRE for ML." You must design the system of dashboards, alerts, and feedback loops that ensures your model stays healthy, effective, and safe—and that tells you exactly when it's time to retrain or roll back.

I call this the "Three Dashboards" approach. You need to monitor your system's health at three levels.

1. Dashboard 1: Technical (SRE) Monitoring (Is it On?)

This is pure, classic backend engineering. This dashboard has nothing to do with AI. It just answers: is the service functional? If this dashboard turns red, pagers go off at 3 AM.

Key Metrics:

p99 Latency: Is our service meeting the <200ms budget we defined in Step 2? If this creeps up, it means our model is getting too complex or our feature lookups are too slow.

Error Rate (5xx): Is our service crashing? Are we seeing

NullPointerExceptionsbecause a feature in the Online Feature Store suddenly went missing?Throughput (RPS): Are we auto-scaling correctly with traffic?

Alerting: "We will have PagerDuty alerts. If the p99 latency exceeds 200ms for more than 5 minutes, or the 5xx error rate crosses 0.1%, an engineer is paged. This is our first line of defense."

2. Dashboard 2: Business/Product Monitoring (Is it Working?)

This is what your Product Manager stares at all day. This dashboard answers: is the model still achieving the business goal we set in Step 1?

Key Metrics:

These are your A/B test metrics: CTR, D7 Retention, Time Spent, Conversion Rate.

These are your Guardrail Metrics: "Report Post" Rate, "Hide Post" Rate, User Appeals (for fraud).

Alerting: "This is how we catch silent failures. A model can be 100% healthy (Dashboard 1 is green) but be serving terrible recommendations. We need 'slow-burn' alerts. For example: 'Alert if 1-hour rolling CTR drops 10% below the 7-day average.' A sudden drop here is a high-severity incident and could mean we're serving non-personalized content."

3. Dashboard 3: Model Health Monitoring (Is the AI Healthy?)

This is the most critical ML-specific dashboard. This is where you, the AI engineer, will spend your time. It answers: is the real world changing in a way that is making my model stale? This is all about detecting drift.

Data Drift (or Feature Drift):

What it is: The inputs to your model have changed. The real world no longer looks like your training data.

Example for Fraud Detection (Q19): "A new iPhone launches. Suddenly, our 'device_type' feature, which was a categorical enum, has a new value our model has never seen before. Our service might crash (a 5xx error!) or, worse, it might default that feature to

nulland silently give a terrible score."How to monitor: "Our data pipeline (Step 4) won't just serve features; it will also log them. We need a monitoring job that compares the statistical distribution of live features against the training set's feature distribution. We'll use a Kolmogorov-Smirnov (KS) test for numerical features and a Chi-Squared test for categorical ones. If the statistical distance for a key feature (like 'transaction_amount') crosses a threshold, we alert. This is a direct signal of data drift."

Concept Drift:

What it is: This is more subtle. The relationship between the inputs and outputs has changed.

Example for Content Moderation (Q14): "A new, coded hate-speech term emerges. The features of the text ('word_count', 'emojis_used') look the same (no data drift), but the meaning has changed. Our model, trained on old data, now incorrectly classifies this new hate speech as 'safe'."

How to monitor: "This is much harder to detect. The best way is to monitor your model's performance on the most recent ground-truth labels. Our system must have a tight feedback loop. For example, 'User reports a post' or 'A human moderator labels a transaction as fraud'. We must immediately join that new label with the prediction our model made. If we see our model's 24-hour rolling Precision or Recall suddenly drop on this fresh data, we have a clear signal of concept drift."

Closing the Loop: Continuous Retraining Strategy

Monitoring is useless if you don't act. The final part of your answer is to define your retraining strategy.

Time-Based Retraining (The Baseline): "Our primary strategy will be time-based retraining. A new version of the model will be automatically trained every single night on the last 30 days of data. This is simple, robust, and automatically catches 'slow' drift in user behavior or content."

Event-Based Retraining (The "Scramble" Button): "For severe drift, a daily cadence is too slow. If our monitoring system (Dashboard 3) fires a high-severity alert for data or concept drift, it should automatically trigger a new training pipeline run. This is crucial for domains like Spam Detection (Q22) or Adversarial Attacks (Q17), where attackers adapt in hours, not days."

By designing this end-to-end monitoring and retraining loop, you've shown you're not just building a static model. You're building a strong, self-healing system that can adapt to the real world. That is what senior AI engineering is all about.

3.2. Ensure Trust, Safety & Ethics

You've built a system that is accurate, scalable (Phase 2), and monitorable (Step 7). Now, you must answer the most important question of all: is it responsible?

This is the "front-page-of-the-newspaper" test. If your model's worst-case failure was published on the front page, could you defend your design? In today's world, this is no longer an optional add-on. For any senior-level interview, this is a mandatory part of the discussion. This is where you prove your maturity.

This step shows you understand that "accuracy" is not the only, or even the most important, metric. The real-world impact of your system on users and society is most important. Your interviewer is testing your ability to be a responsible steward of the company's technology and reputation.

You need to show you have a concrete, proactive plan. I recommend breaking your answer down into these four pillars.

1. Fairness & Bias Mitigation (The "Is it Fair?" Test)

This is the most critical area. AI models are "prediction machines" that are trained on historical, real-world data. Because our world has historical biases, your model will learn them and will amplify them if you do nothing.

Detection (The Audit): "We cannot fix what we don't measure. In Step 1, we defined fairness as a guardrail metric. Here, we implement it. Our evaluation pipeline (Step 7) won't just report a single, global accuracy score. It must calculate disaggregated metrics for key slices."

Example for Job Recommendations (Q4): "We will measure the 'recommendation rate' and 'application rate' across protected categories like gender and ethnicity. We must ensure our model shows high-paying job opportunities equitably to all qualified candidates, not just the majority group it was trained on."

Example for Content Moderation (Q14): "We'll measure the False Positive Rate (FPR) for different dialects or accents. It's a known industry problem that models incorrectly flag non-mainstream dialects (like AAVE) as 'toxic' at a much higher rate. We must measure this and treat a high FPR for one group as a P0 bug."

Mitigation (The Fix): "We can intervene at three stages:"

Pre-processing (In our Data Pipeline - Step 4): We can use re-sampling (e.g., oversampling under-represented groups) or re-weighting (giving more importance to samples from minority groups) in our training data.

In-processing (During Training - Step 5): We can use advanced techniques like adversarial debiasing or constrained optimization, where we add a fairness metric directly into the model's loss function.

Post-processing (At Inference - Step 6): As a simpler, more direct method, we can apply different prediction thresholds for different groups to ensure their outcomes are equitable.

2. Privacy & Data Governance (The "Is it Creepy?" Test)

Our systems are fueled by user data. How do we use that data without violating user trust or the law (like GDPR or CCPA)?

Data Minimization: "Our first principle is to use as little sensitive data as possible. We'll conduct a review to ask: 'Do we really need this feature?' For Healthcare AI (Q12), we would never train on raw names or addresses."

PII Masking & Anonymization: "Our Data Pipeline (Step 4) must have an automated PII detection and masking service. All sensitive fields (SSNs, names, etc.) must be hashed, salted, or replaced with non-reversible tokens before they are ever saved in our training data lake."

Advanced Techniques (Bonus Points): "For extremely sensitive data (like in healthcare or finance), we can propose Differential Privacy, which adds statistical noise to our data or queries to make it mathematically impossible to re-identify any single individual. Or, we could even explore Federated Learning, where the model trains on the user's device, so the raw personal data never leaves their phone."

3. Interpretability & Explainability (XAI) (The "Why?" Test)

This is essential for building trust, debugging your model, and meeting legal requirements (like the "right to explanation").

Global Interpretability: "We need to understand our model as a whole. This is why we chose XGBoost (Step 5) as our baseline. It's not a black box. We can use SHAP values or simple feature importance plots to see which features globally drive predictions. This helps us catch 'shortcut learning,' where the model is keying off a spurious correlation (e.g., 'zip code' being the #1 predictor of fraud)."

Local Interpretability: "We must be able to explain a single decision. For Fraud Detection (Q19), if a user's transaction is blocked, our customer service team needs a reason. Our system can't just say 'model said no.' It must provide a local explanation, like 'This transaction was flagged due to an unusual location and a high transaction amount.' Tools like LIME or individual SHAP values can provide this."

4. Robustness & Adversarial Safety (The "Can it be Hacked?" Test)

This is the "security" of your AI. Bad actors will try to attack your system.

Classic Adversarial Attacks: "For Image Moderation (Q14), attackers will add tiny, invisible-to-humans noise to an image to make our classifier see a 'cat' instead of a 'gun'. We can combat this with Adversarial Training, where we proactively 'attack' our own models during training and teach them to be resilient."

LLM Safety (Modern): "For any LLM-based system (Q11, Q17), the risks are even higher. We must defend against Prompt Injection (a user tricking the model into ignoring its instructions) and Jailbreaking (a user trying to get the model to produce unsafe, hateful, or dangerous content)."

Mitigation: "We must build input/output firewalls. Our system will have a pre-processing step to sanitize user prompts (e.g., stripping certain commands) and, more importantly, a post-processing step that filters the LLM's final output to ensure it doesn't contain hate speech, PII, or unsafe instructions before it ever reaches the user."

By covering these four pillars, you are proving you're not just a technician. You are a mature, responsible engineer who understands that trust is the ultimate metric.

3.3. Defend Trade-offs & Iterate

This is your "grand finale." You've designed the entire system, from objective to monitoring. The interview is winding down. The interviewer will lean back and hit you with what sounds like a simple, conversational question:

"This is a great design. What are the biggest risks?"

"What would you do differently if you had 6 more months?"

"Why did you choose an XGBoost model instead of a deep learning one? Wouldn't a Transformer be better?"

Make no mistake: this is not a softball question. This is the final and most important test of your knowledge.

An immature engineer hears this and gets defensive. They backtrack, get flustered, or try to argue that their design is perfect. A mature engineer smiles. They've been waiting for this. They understand that engineering is nothing but a series of trade-offs.

Your goal here is not to be "right." It's to be thoughtful. You must show you're a strategic leader who sees the full picture. You will confidently justify why your V1 design is the right starting point, and then you'll show your vision by laying out a roadmap for V2 and V3.

1. Defend Your Trade-offs (The "Why" Behind Your Build)

This is where you tie everything back to the objectives and constraints you defined in Phase 1. You must prove that every major decision you made in Phase 2 was a deliberate choice to optimize for one thing, even at the cost of another.

This is your "strong-form" argument. Never apologize. Justify.

Trade-off: Model Complexity vs. Latency & Cost

Interviewer: "Why a simple LightGBM model for your ranker (Step 5)? A complex, multi-headed Transformer would give you better offline accuracy."

Your Answer: "That's an excellent point. A large deep learning model would likely give us a 2-3% lift on our offline NDCG metric. But as we defined in Step 2, our p99 latency budget is 200ms and our inference cost per user is a key constraint. My LightGBM model meets that latency and cost budget, is highly interpretable (which supports our Safety goal in Step 8), and delivers 95% of the business value today. I am intentionally trading a small, potential accuracy gain for a massive, guaranteed win in reliability, speed, and cost-effectiveness. That is the right business decision for our V1 launch."

Trade-off: Data Freshness vs. Engineering Complexity

Interviewer: "Your dual online/offline feature pipeline (Step 4) is very complex. Why not just use a simple daily batch job for all features?"

Your Answer: "We absolutely could start with a batch-only system, and it would be simpler to build. But our core business objective (Step 1) for this News Feed is to increase engagement by reacting to real-time user intent. If we only use batch features, the user's clicks from five minutes ago are invisible. The feed will feel stale. I am deliberately taking on the higher engineering complexity of a streaming pipeline because it directly serves our primary business goal. The V1 must feel responsive."

Trade-off: Scalability vs. Simplicity

Interviewer: "A two-stage (retrieval/ranking) architecture seems like overkill. Why not just a single, powerful model?"

Your Answer: "At a smaller scale, a single model would be perfect. But our constraint (Step 2) is billions of items. It is computationally impossible to score all billion items for every user in real-time. My two-stage architecture is a classic trade-off: The retrieval model trades precision for speed, finding the 'best 1,000' items in 30ms. The ranking model then trades speed for precision, applying our full, feature-rich logic to just those 1,000. This is the only proven pattern to solve this problem at that scale."

2. Iterate (The "V2/V3 Roadmap")

You've defended V1. Now, you show your vision. This is how you turn any "weakness" into a "future opportunity."

Interviewer: "What are the biggest limitations of your design?"

Your Answer: "That's the best part of this design—it's built to iterate. The V1 I've laid out is the robust foundation. Once we're stable and have our monitoring (Step 7) in place, here is my roadmap for V2 and V3."

V2: Smarter Features & Models

"My V1 model was a LightGBM. Now that our pipelines are proven, V2 is our Deep Learning phase. I would start by building a Wide-and-Deep DNN. This lets us combine the memorization power of our existing categorical features (the 'Wide' part) with the generalization power of deep embeddings (the 'Deep' part). This will be our first big accuracy jump."

"I'd also enhance our Online Feature Store. In V2, we'll build more sophisticated long-term user embeddings (e.g., from a two-tower model) and serve them in real-time. This combines deep user history with their 5-minute intent."

V3: A Multi-Objective, Next-Gen System

"My V1 model optimized for a single metric, like 'probability of click' (Step 5), because it's a simple, strong signal. But our real business goal (Step 1) is 'long-term retention', which is a composite of many things."

"In V3, I would move to a Multi-Objective Model. This model would be trained to simultaneously optimize for:

Positive Engagement (e.g., likes, shares, long dwell time)

Negative Feedback (e.g., 'hide post', reports)

Diversity & Novelty (to prevent a 'filter bubble')

Fairness (our guardrail metric from Step 8)

This multi-objective model is the ultimate expression of our system. It moves from a simple proxy metric to directly training on the complex, nuanced business goals we defined at the very beginning."

By ending this way, you've left the interviewer with zero doubts. You've proven you're a practical builder (V1), a smart optimizer (V2), and a visionary leader (V3) all in one. You've given them a complete, confident, and senior-level answer from start to finish.